Prologue

Today is the day!

Months of checking your spam folder are finally over!

You have just received an email from OpenAI, granting you access to build on top of their API!

At long last, you can begin working on that idea for an app powered by OpenAI.

Excited at the prospect of developing with one of the world’s most advanced natural language models, you open up the playground and build your first prompt as a way to test the service.

Your first request gets sent in. Seconds later, a result comes back.

Reading the result, with a slight grin on your face, you note, ‘That’s interesting. Not exactly what I had in mind. I wonder what the model would come back with if I did this?’

A few prompt tweaks and requests later, you start seeing better quality results. Your overall satisfaction with the results is on the rise.

Noting that you can build more efficiently in a Jupyter notebook, you decide to export the Python prompt settings from the playground and switch to one of your favourite early development environments.

And as part of this process, you begin to make observations and rate your satisfaction with each result returned by the model.

‘A Test and Learn approach to developing on OpenAI’, you ponder. ‘I wonder what that could look like?’

A Data-Driven Approach to Early-Stage Development

This article is a first attempt to build a test and learn process around developing on OpenAI’s GPT-3 natural language model. Think of the ideas presented here as an open exploration of how we can take a more scientific approach to the earliest stages of development with GPT-3.

That said, developing with this powerful API is unlike anything else I’ve experienced, which is fascinating in its own right. Traditionally, when you make requests to an API, you follow strict rules and the responses are well defined.

When we first start developing an idea that uses GPT-3, we should assume that we don’t really know what the model’s response will be. We can only craft inputs that steer the model towards the outputs we want.

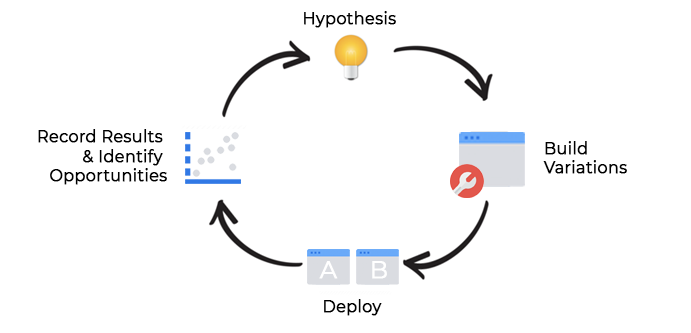

In a way, early development with GPT-3 reminds me of A/B testing, and this approach borrows heavily from my experience in that domain.

At a high level, when running an A/B test, we have an idea that we want to explore on our website or app. We develop a hypothesis, build variations, deploy them, and then record the result and any key learnings before refining our hypothesis. Then the testing cycle repeats.

When developing early on with GPT-3, the process feels similar.

Start with an idea that you want to explore. Then build a prompt and configure its parameters for the initial request to the API. Make the request, and then take a moment to observe the response. Did the response return what you expected? And as part of your development workflow, you could ask yourself:

- How satisfied am I with the quality of this result? (Record the rating on a scale of 1–10.)

- What could I change in my prompt or settings to improve the output quality?

- Did I do anything that could have resulted in an unexpected output?

Recording your observations takes very little time, and the data you collect should give you more insight into how to produce better-quality outputs over time.
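If you tag each run with the prompt variant it used, comparing variants becomes a simple aggregation, much like reading out an A/B test. The sketch below is a minimal, standard-library-only illustration. The `variant` field and the `summarize_ratings` helper are hypothetical (the logging function later in this article does not record a variant label), and the ratings are made up purely to show the shape of the data.

```python
from collections import defaultdict
from statistics import mean


def summarize_ratings(runs):
    """Return {variant: (number_of_runs, mean_score)} for a list of logged runs.

    Each run is assumed to be a dict with a hypothetical 'variant' key
    (e.g. which prompt version was used) and a 'satisfaction_score' (1-10).
    """
    scores = defaultdict(list)
    for run in runs:
        # int() also accepts scores stored as strings, since input() returns str
        scores[run["variant"]].append(int(run["satisfaction_score"]))
    return {variant: (len(s), mean(s)) for variant, s in scores.items()}


# Illustrative ratings only, not real results.
runs = [
    {"variant": "A", "satisfaction_score": 4},
    {"variant": "A", "satisfaction_score": 6},
    {"variant": "B", "satisfaction_score": "7"},
]
print(summarize_ratings(runs))
```

With only a handful of ratings per variant, a difference in the averages is a hint about what to try next rather than a firm conclusion.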

So, at the earliest stages of development with GPT-3, focus on understanding how to deliver the highest quality outputs and borrow ideas from the A/B testing process to help you iterate rapidly.

Benefits of this Approach

I log results with the goal of improving output quality over the course of a project. Once I’m satisfied with the results, I can begin migrating the code to a web application for broader testing.

I package up the details of each request and send them to a Google BigQuery table using pandas_gbq. I’ll walk through how to do this shortly.

The benefits I have experienced to date include:

- A versioned history of all of your requests broken down by project.

- An improved understanding of what works and what doesn’t as a result of recording observations about model output

- Understanding how model output quality has changed over time

- Understanding how prompt engineering efforts have evolved over time

- Estimates of the costs of developing a particular project, based on prompt and response lengths

- Having a database of projects with settings that you can draw on for other OpenAI projects

- A way to quantify a project’s output quality before migrating code into a web app
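A rough cost estimate can be derived from the logged lengths. Note that the logging function later in this article records lengths in characters, while OpenAI bills per token. The sketch below assumes the common rule of thumb of roughly four characters per token for English text and a single price per 1,000 tokens for both prompt and completion; `price_per_1k_tokens` is a placeholder you would fill in from OpenAI’s current pricing, and both helpers are hypothetical.

```python
def estimate_cost(prompt_chars, response_chars, price_per_1k_tokens, chars_per_token=4.0):
    """Roughly estimate the cost of one request from character lengths.

    Assumptions: ~4 characters per token (a rule of thumb for English text)
    and one price per 1,000 tokens covering both prompt and completion.
    """
    total_tokens = (prompt_chars + response_chars) / chars_per_token
    return total_tokens / 1000 * price_per_1k_tokens


def estimate_project_cost(rows, price_per_1k_tokens):
    """Sum the estimates over logged rows that have 'prompt_length' and 'response_length'."""
    return sum(
        estimate_cost(r["prompt_length"], r["response_length"], price_per_1k_tokens)
        for r in rows
    )


# Hypothetical logged rows and a placeholder price, for illustration only.
rows = [
    {"prompt_length": 60, "response_length": 200},
    {"prompt_length": 60, "response_length": 140},
]
print(estimate_project_cost(rows, price_per_1k_tokens=0.02))
```

Because the characters-per-token ratio is only an approximation, treat the result as an order-of-magnitude figure.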

Let’s Get Started

So let’s get started and I’ll walk through some code for how to capture details about your early stage GPT-3 development efforts. I love using a notebook at the earliest stages of development as it enables me to rapidly iterate and experiment with various inputs.

If you’d like a copy of the Jupyter notebook, just fill out the form at the bottom of this article. Otherwise, copy and paste the code as you work through this article and run it yourself 🙂

Requirements for this Article

In this article, I’m going to assume that you have some Python skills. In case you don’t, I’ve tried to document how this code works in as much detail as possible and have included some links to help you get started. Here’s what you’ll need:

- An OpenAI key: if you don’t have one, you’ll need to apply for one here.

- Python knowledge

- Experience with Jupyter Notebook or Google Colab

- Access to Google BigQuery

- Knowledge of how to create a service account for Google Cloud

So let’s begin!

A Test and Learn Approach using Jupyter Notebooks

Import Modules

The first thing we need to do is import the following modules.

```python
# Import modules
import os
import datetime

import openai
import pandas as pd
import pandas_gbq as gbq
from google.oauth2 import service_account
```

Project Details

Choose a name for your project and set a project goal.

```python
# Name your project and define a goal
project_name = "openai_test_and_learn"
project_goal = "Build a test and learn framework around developing on the OpenAI GPT-3 API"
```

Credentials

Let’s use environment variables to store our OpenAI API key and the path to our Google Cloud Platform service account key file. If you have never used environment variables before, here’s a helpful article about how to get started with them in Anaconda environments.
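Because `os.environ.get` returns `None` when a variable isn’t set, a missing key won’t fail until much later (for example, when the service account file is loaded). A small fail-fast check can help. `require_env` below is a hypothetical helper, not part of any library; the variable names in the usage comment are the ones this article uses.

```python
import os


def require_env(name):
    """Return the value of environment variable `name`, or fail with a clear message.

    os.environ.get() returns None for a missing variable, which otherwise only
    surfaces later as a confusing error. Empty values are treated as missing too.
    """
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Environment variable {name!r} is not set")
    return value


# Possible usage in the notebook:
# openai.api_key = require_env('OPENAI_KEY')
# gcpkey = require_env('GCP_KEY')
# gcp_projectid = require_env('GCP_PROJECT_ID')
```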

At this stage, we’ll also need to set our GCP Project ID, set our destination BQ table and initialize our GCP service account credentials.

```python
# OpenAI key
openai.api_key = os.environ.get('OPENAI_KEY')

# Google BigQuery settings: path to the service account key file and GCP project id
gcpkey = os.environ.get('GCP_KEY')
gcp_projectid = os.environ.get('GCP_PROJECT_ID')

# The BigQuery table (dataset.table) where we'll send and log our data
bq_table = 'openai.test_learn_demo'

# BigQuery service account credentials, loaded from the key file
credentials = service_account.Credentials.from_service_account_file(gcpkey)
```

Next, let’s create a dictionary from the values we just defined above. We’ll pass it into a function that sends our data to BigQuery.

```python
# Project details which we'll use when sending data to BigQuery
project_details = {
    "project_name": project_name,
    "project_goal": project_goal,
    "gcp_key": gcpkey,
    "gcp_project_id": gcp_projectid,
    "bq_table": bq_table,
    "gcp_credentials": credentials,
}
```

Create a Prompt function

The first function we will create is called ‘build_prompt’.

I like creating a function for my prompts because I tend to build them dynamically, often inserting user-generated content into the prompt.
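To illustrate the dynamic case, here is a minimal sketch of a template-based prompt builder. `PROMPT_TEMPLATE` and `build_prompt_from_template` are hypothetical names for this example only; the article’s own, simpler `build_prompt` is what the rest of the code uses.

```python
# Hypothetical template: the fields in braces are filled in at request time.
PROMPT_TEMPLATE = "Create a story about {topic}.\n{opening_line}"


def build_prompt_from_template(template, **fields):
    """Fill a prompt template with user-supplied fields.

    Raises KeyError if the template references a field that wasn't supplied,
    so a missing input fails loudly instead of producing a half-empty prompt.
    """
    # Strip surrounding whitespace from user input before inserting it.
    cleaned = {key: str(value).strip() for key, value in fields.items()}
    return template.format(**cleaned)


prompt = build_prompt_from_template(
    PROMPT_TEMPLATE, topic="exploring the moon", opening_line="Once upon a time,"
)
print(prompt)
```

Keeping the template separate from the inputs makes it easier to log which template produced which output once you start comparing variations.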

I’m going to build a simple prompt that will generate a few opening lines to a short story about exploring the moon. But feel free to use your own prompt design at this stage.

```python
def build_prompt(user_input):
    '''
    Background: This function takes the parameter user_input and incorporates it into the prompt.

    Params:
        user_input (string): User-generated input that we will pass into the prompt.
            In this case, it will be the start of our story - "Once upon a time,"

    Returns:
        prompt (string): The prompt that includes user_input and will be sent
            along with the GPT-3 request
    '''
    # The first line describes what we want GPT-3 to do;
    # the second line is the opening of the story for GPT-3 to continue.
    prompt = f"Create a story about exploring the moon.\n{user_input}"
    return prompt
```

Create a function that logs our GPT-3 inputs and outputs

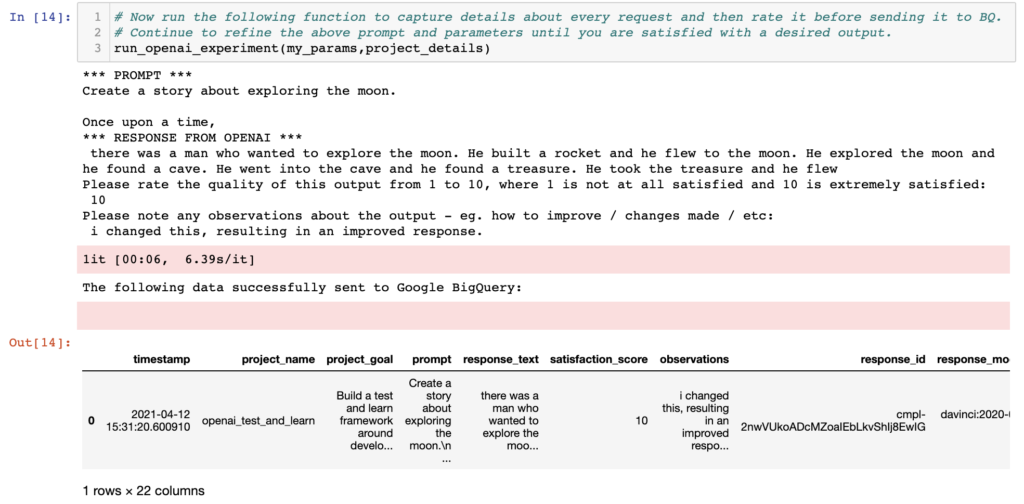

The next function we will create is called ‘run_openai_experiment’.

This function does the following:

- Captures all details about the request and response from GPT-3

- Prompts the user to rate the response on a scale of 1–10

- Prompts the user to record any observations

- Creates a dataframe of details before sending it to Google BigQuery.
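`input()` always returns a string, so a typo such as `77` or `seven` would be logged as-is. One way to guard against that is a small validation loop. `parse_rating` and `ask_rating` are hypothetical helpers that could stand in for the bare `input()` call used for the satisfaction score.

```python
def parse_rating(raw, low=1, high=10):
    """Convert a typed rating (input() returns a string) to an int in [low, high].

    Raises ValueError for non-numeric or out-of-range input, so a typo
    can't end up in the log as a bogus score.
    """
    score = int(raw.strip())
    if not low <= score <= high:
        raise ValueError(f"Rating must be between {low} and {high}, got {score}")
    return score


def ask_rating(prompt_text):
    """Keep asking until the user enters a valid rating."""
    while True:
        try:
            return parse_rating(input(prompt_text))
        except ValueError as err:
            print(f"Invalid rating: {err}")


# Possible usage inside the logging function:
# satisfaction_score = ask_rating('Please rate the quality of this output from 1 to 10:\n ')
```

Storing the score as an integer also makes it easier to average scores later, whether in Python or in BigQuery.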

I’m not going to go into detail about each model parameter used in the request, and instead will link to this great guide detailing GPT-3 model parameters.

Here’s the function:

```python
def run_openai_experiment(params, project_details):
    '''
    Background: This function captures all details about a request to the OpenAI API
    and sends this data into Google BigQuery.

    Params:
        params (dict): Contains all of the parameters used in an OpenAI GPT-3 request
        project_details (dict): Contains our project settings and BigQuery settings

    Returns:
        df: A dataframe with details about the request + response
    '''
    # Create a timestamp
    timestamp = datetime.datetime.now()

    # Print the prompt
    print('*** PROMPT ***')
    print(params['prompt'])

    # Make a request to OpenAI using the selected params.
    # openai.Completion belongs to the pre-1.0 openai SDK (pip install "openai<1.0").
    response = openai.Completion.create(
        engine=params['engine'],
        prompt=params['prompt'],
        max_tokens=params['max_tokens'],
        temperature=params['temperature'],
        top_p=params['top_p'],
        frequency_penalty=params['frequency_penalty'],
        presence_penalty=params['presence_penalty'],
        n=params['n'],
        stream=params['stream'],
        logprobs=params['logprobs'],
        stop=params['stop'],
    )

    # Parse out the response (only the first choice is logged)
    response_text = response.choices[0]['text']
    response_id = response['id']
    response_model = response['model']
    response_object = response['object']

    # Display the output to the user
    print('*** RESPONSE FROM OPENAI ***')
    print(response_text)

    # Ask for a satisfaction score (input() returns it as a string)
    satisfaction_score = input('Please rate the quality of this output from 1 to 10, where 1 is not at all satisfied and 10 is extremely satisfied:\n ')

    # Record observations about the output
    observations = input("Please note any observations about the output - e.g. how to improve / changes made / etc:\n ")

    # Gather all of our data about the request, the response and our observations
    data = {
        'timestamp': timestamp,
        'project_name': project_details['project_name'],
        'project_goal': project_details['project_goal'],
        'prompt': params['prompt'],
        'response_text': response_text,
        'satisfaction_score': satisfaction_score,
        'observations': observations,
        'response_id': response_id,
        'response_model': response_model,
        'response_object': response_object,
        'response_length': len(response_text),   # in characters, not tokens
        'prompt_length': len(params['prompt']),  # in characters, not tokens
        'engine': params['engine'],
        'max_tokens': params['max_tokens'],
        'temperature': params['temperature'],
        'top_p': params['top_p'],
        'frequency_penalty': params['frequency_penalty'],
        'presence_penalty': params['presence_penalty'],
        'n': params['n'],
        'stream': params['stream'],
        'logprobs': params['logprobs'],
        'stop': params['stop'],
    }

    # Create a one-row dataframe from the data
    df = pd.DataFrame([data])

    # Send the data to Google BigQuery using pandas_gbq, reusing the credentials
    # loaded earlier and appending to the table if it already exists
    gbq.to_gbq(
        df,
        project_details['bq_table'],
        project_id=project_details['gcp_project_id'],
        credentials=project_details['gcp_credentials'],
        if_exists='append',
    )

    print('The following data was successfully sent to Google BigQuery:')
    return df
```

Let’s define our user input and GPT-3 Model parameters

OK, we’re nearly there. For our final step, we just need to define two more things:

- user_input: This will be the opening line of the story we want GPT-3 to build on. It gets passed into our build_prompt function.

- model_params: These are the parameters for the request to the OpenAI API

Here’s what this code looks like:

```python
# The opening line to our story we want GPT-3 to build on
user_input = 'Once upon a time,'

# Let's take user_input and use it to generate a prompt
my_prompt = build_prompt(user_input)
```

Now that we have our prompt built, let’s build our GPT-3 model parameters dictionary. These are the settings we use to tailor our request to the OpenAI API. For our story example, I’ve used the following settings:

```python
# GPT-3 request parameters
model_params = {
    'engine': 'davinci',
    'prompt': my_prompt,
    'max_tokens': 50,
    'temperature': 1.0,
    'top_p': 0.5,
    'frequency_penalty': 0,
    'presence_penalty': 0,
    'n': 1,
    'stream': None,
    'logprobs': None,
    'stop': None,
}
```
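Before sending a request, it can be worth sanity-checking these values. The sketch below is a hypothetical `check_params` helper. The ranges in it (temperature 0 to 2, top_p 0 to 1, penalties between -2 and 2) and the advice to change temperature or top_p but not both reflect OpenAI’s Completions API reference as I understand it, so treat them as assumptions and confirm them against the current documentation.

```python
# Ranges assumed from OpenAI's Completions API reference; verify against current docs.
PARAM_RANGES = {
    "temperature": (0.0, 2.0),
    "top_p": (0.0, 1.0),
    "frequency_penalty": (-2.0, 2.0),
    "presence_penalty": (-2.0, 2.0),
}


def check_params(params):
    """Return a list of problems found in a GPT-3 request parameter dict (empty if none)."""
    problems = []
    for name, (low, high) in PARAM_RANGES.items():
        value = params.get(name)
        if value is not None and not low <= value <= high:
            problems.append(f"{name}={value} is outside [{low}, {high}]")
    # OpenAI's docs recommend adjusting temperature or top_p, but not both.
    if params.get("temperature", 1) != 1 and params.get("top_p", 1) != 1:
        problems.append("both temperature and top_p changed from their default of 1")
    max_tokens = params.get("max_tokens")
    if max_tokens is not None and max_tokens < 1:
        problems.append("max_tokens must be at least 1")
    return problems


example = {"temperature": 1.0, "top_p": 0.5, "frequency_penalty": 0,
           "presence_penalty": 0, "max_tokens": 50}
print(check_params(example))  # []
```

With the settings above (temperature left at its default of 1.0 and only top_p lowered), the check returns an empty list.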

Let’s now run it by calling run_openai_experiment:

```python
# Pass in GPT-3 model parameters and project details
run_openai_experiment(model_params, project_details)
```

Reviewing the Output from run_openai_experiment

When you call run_openai_experiment, you’ll see the following outputs generated:

- The prompt that you designed in the build_prompt function

- The response generated by the OpenAI API

- A satisfaction rating for the output (which you fill in)

- Observations about the output (which you fill in)

- Confirmation that the data was logged and sent to Google BigQuery

The output should look something like this:

Now let’s optimize and make our output better!

At this point, you have scored your output and probably have some ideas for how to make the results better.

Based on these ideas, do the following:

- Tweak your prompt

- Tweak your GPT-3 model parameters

- Adjust your user_input

- Call run_openai_experiment again
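If you’d like the next round of tweaks to be more systematic, one option is to generate parameter variations up front and run each one through `run_openai_experiment`, rating every output as you go. `make_variants` is a hypothetical helper; it only builds the parameter dictionaries and makes no API calls.

```python
from itertools import product


def make_variants(base_params, **grid):
    """Return one copy of base_params per combination of the values in grid.

    e.g. make_variants(params, temperature=[0.7, 1.0]) gives two dicts
    that differ only in temperature.
    """
    names = list(grid)
    variants = []
    for values in product(*(grid[name] for name in names)):
        variant = dict(base_params)  # shallow copy, so base_params stays unchanged
        variant.update(zip(names, values))
        variants.append(variant)
    return variants


base = {"engine": "davinci", "max_tokens": 50, "temperature": 1.0, "top_p": 0.5}
for v in make_variants(base, temperature=[0.7, 1.0], max_tokens=[50, 100]):
    print(v["temperature"], v["max_tokens"])

# Possible usage with the article's objects:
# for params in make_variants(model_params, temperature=[0.7, 1.0]):
#     run_openai_experiment(params, project_details)
```

Varying one parameter at a time, as in an A/B test, makes it easier to attribute a change in your ratings to a specific tweak.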

Continue testing and rating the output until you are confident in, and satisfied with, your results.
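To check whether your ratings are actually improving as you iterate, you could average them per day. The rows could come from your BigQuery table, for example by querying it with `pandas_gbq.read_gbq` and converting the result with `DataFrame.to_dict('records')`. `daily_average_scores` is a hypothetical helper, and the sample rows below are made up for illustration.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def daily_average_scores(rows):
    """Return [(date, mean_score, n_runs), ...] sorted by date.

    Each row is assumed to have a datetime-like 'timestamp' and a
    'satisfaction_score' (possibly a string, since input() returns str).
    """
    by_day = defaultdict(list)
    for row in rows:
        by_day[row["timestamp"].date()].append(int(row["satisfaction_score"]))
    return [(day, mean(scores), len(scores)) for day, scores in sorted(by_day.items())]


# Illustrative rows only, not real results.
rows = [
    {"timestamp": datetime(2021, 3, 1, 10, 0), "satisfaction_score": "4"},
    {"timestamp": datetime(2021, 3, 1, 11, 30), "satisfaction_score": "6"},
    {"timestamp": datetime(2021, 3, 2, 9, 15), "satisfaction_score": "8"},
]
for day, avg, n in daily_average_scores(rows):
    print(day, avg, n)
```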

Then the real fun begins…migrating your code into a web app!

Feedback

Thanks for taking the time to read this article. I hope the ideas presented in this article help you generate better quality outputs from GPT-3.

Given that this is an early stage idea, I’d love to hear if you have any feedback about how to improve this process! I’d also be interested in any variations that integrate with other Cloud providers such as AWS or Microsoft Azure. Oh, and last but not least, I’d love some feedback about how you would visualize this BigQuery data in a dashboard!

So please let me know: comment below or connect with me on LinkedIn.

Thanks again and I look forward to hearing from you!
